MACHINE LEARNING - DAY 2

LINEAR REGRESSION

Notations:

m: number of training sets

x: input variable/feature

y: output variable/ target variable

( x(i) , y(i)): ith training set

Linear regression is mostly used in supervised learning and with the given data-set, our aim is to learn a function or a hypothesis h: x -> y, so that h(x) is a “good” predictor for the corresponding value of y.

Hypothesis for linear regression:

hΘ(x) = Θ0 + Θ1x

Or

Or

h(x) = Θ0 + Θ1x

Where,

h(x): hypothesis for the problem

Θ0: constant or the intercept

Θ1: the slope of the line

COST FUNCTION

The accuracy of the prepared hypothesis can be found out by using a cost function. This takes an average difference of all the results of the hypothesis with the inputs from x and the actual output y.

Given below is the required cost function:

The function is called Squared Error Function.

Now, hΘ(x(i)) - y(i)) is the difference between the predicted results for the input x and the real output y. Taking summation of it will provide the total difference between the predicted output and the real output.

Here 1/2 is taken to simplify the calculations which we will see in gradient descent.

AIM: To minimize the cost function J(Θ0, Θ1)

Hypothesis : hΘ(x) = Θ0 + Θ1x

Cost function :

To minimize the cost function or to get the best fit the line should pass through all the values in the result set. In such a case

J(Θ0, Θ1) = 0

as the distance between the prediction value and the actual value is zero.

Note: for Θ0 , Θ1 and J(Θ0, Θ1) we plot contour plots as they are 3D plots and hence are used to plot 3 values. The smallest circle in the contour plot, when shown in 2D, depicts the global minimum which is the perfect fit for the given hypothesis.

Now the question arises how to find the accurate (Θ0, Θ1) values??

The solution for the above question is a technique called Gradient Descent which is our next topic.

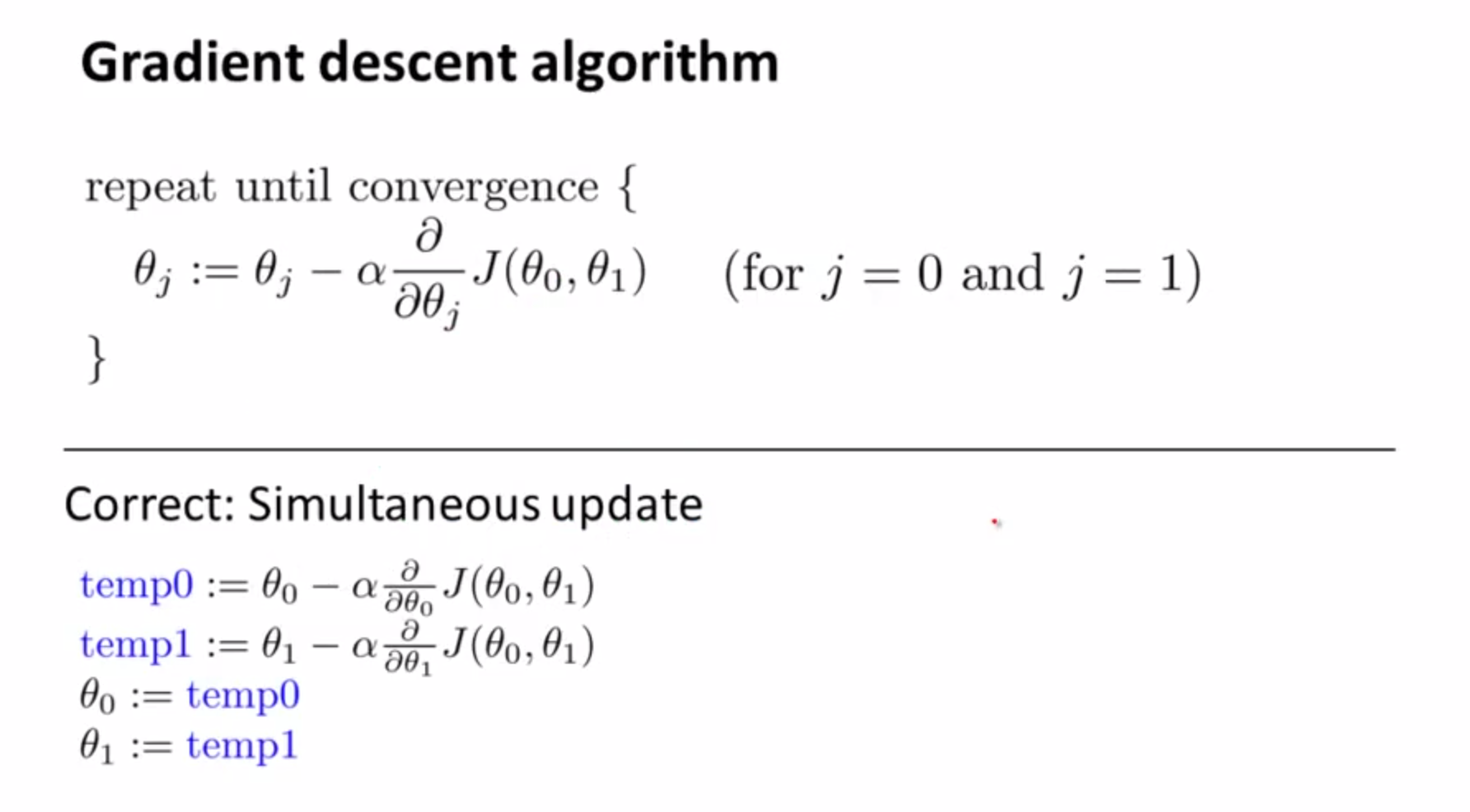

GRADIENT DESCENT

Gradient Descent is used to find the Θi values for i = 0,1,….n to minimize the cost function J(Θi).

Formula of Gradient Descent :

Functioning of Gradient Descent:

Note: If α is too small then it will take a lot of time to converge to the global minimum.

Note: If α is too large then instead of converging to the global minimum it will start diverging.

So the choice of α i.e., the learning rate is very important.

Now since for every next value of (Θ0, Θ1) the gradient descent algorithm is executed and hence the partial derivative is taken which leads to updating the values of (Θ0, Θ1) simultaneously after each iteration.

Now let’s see how the parameter i.e.,(Θ0, Θ1) values are found

In the first case, the point A has a positive slope which depicts the value of partial derivative of J(Θ0, Θ1) and so the gradient formula decreases the value of (Θ0, Θ1) as

Θ0 - (+ve) = decrease in value of Θ0

Θ1 - (+ve) = decrease in value of Θ1

and finally it will reach to point B which is the global minimum.

Similarly, in the second case, point A has a negative slope which depicts the value of partial derivative of J(Θ0, Θ1) and so the gradient formula increases the value of (Θ0, Θ1) as

Θ0 - (-ve) = increase in value of Θ0

Θ1 - (-ve) = increase in value of Θ1

and finally it will reach to point B which is the global minimum.

As the point reaches to point B, the derivative will come 0 because the actual output and the predicted output are same and hence derivative of a constant is 0, so the value of (Θ0, Θ1) will not change any further and that’s the required values for our parameters.

In this way gradient descent help in figuring out which value set for (Θ0, Θ1) would suit the hypothesis for the best fit.

This process is also called Batch Processing since for every computation for (Θ0, Θ1), the process looks upon the entire batch of data until and unless it finds the global minimum.

That's all for day 2. Next we will learn about linear regression with multiple variables in DAY 3.

If you feel this article helped you in any way do not forget to share and if you have any thoughts or doubts upon it do write them in the comment section.

Till then Happy Learning..

No comments:

Post a Comment